Blogs

Consulting

The Process - the (usually) invisible yet crucial underlying matrix that guides us through our daily and also business lives; it can be found "everywhere" whether you're making a cup of tea or managing a complex order flow, it's always necessary to understand and follow the best path(s) towards the desirable outcome. Even the simpler processes, like making of cup of tea, can have many different variations "significantly" influencing the final brew, as our friends from various parts of the world can attest (http://goo.gl/uQk8kU, https://goo.gl/EoKgLa).

Yet these ordinary "processes" turn pale in comparison to what is going on in the business/IT landscape - multiple variations of inputs, outputs and in-betweens, disparate states, speeds and parallel paths, application of metrics and KPIs, actors - all this must be expected when trying to master the processes in the business and IT domains.

Traditionally the organizations apply the "static" method of understanding the process - reading the documentation and workshops with process owners, all this resulting in drawing and describing the process diagrams (e.g., in UML, BPMN or other popular annotations). Although still relevant, this method is considerably limited by its slightly outdated approach and ultimately fails to deliver up-to-date, full 360' view of the process and all surrounding elements (systems, actors, limitations, KPIs).

The main problem with this approach is that it takes lot of time to understand what is going on - there is usually scarce or no documentation available at all, the process owners are frequently very busy or also completely unavailable. So when the resulting process is finally pieced together it usually reads as "sunny-day" process description that might be very different to what is actually really going on. Additionally, this analysis might completely miss various crucial workarounds used by the "crafty" users, hidden process loops and more importantly the unofficial team relations/interactions (when someone is covering tasks that they probably shouldn't). Finally, it's difficult to measure the actual process performance on the basis of this as-is model as additional effort is needed to first implement the KPIs, then jumpstart the collection from the identified extraction points and finally generate the performance reports.

But the famous "wind of change" has finally reached this domain and brought new revolutionary techniques of process mining (backed up by almost incomprehensible scientific white papers ;-) that can tremendously complement the original approach. The main idea is that every process leaves a trace in the form of information available as log files from all the IT systems that support the process - whether these are dumps from interfaces, (trouble) tickets from CRM system or input/output records of some scripts - on the basis of all these inputs, the process mining techniques can give surprising and most importantly unbiased insight into the processes which can decidedly speed up the understanding of the process and highlight possible areas of improvement.

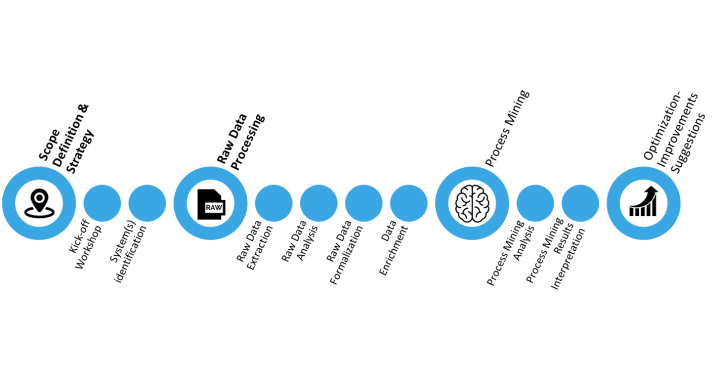

The processing of these logs can be divided into multiple stages:

This combined approach of analysis based on the static and mining techniques assures complete and up-to-date process overview that is based not only on some recollections of process owners or incomplete documentation, but also on actual hard data; this approach is also very fast as it reduces the usual "endless" workshops to minimum.

The gathered insight can be then further used in multiple ways - the process team can start to devise quick remedies for discovered or highlighted issues or even try to completely redesign the process. The available quantification of the process performance will also help to renegotiate the present KPIs and metrics; even the performance of the teams can be also evaluated on the basis of this understanding.

But wait, the savvy IT manager might interrupt, don't I have all of this already in my operational analytics / reporting tools?! The answer is - "partially yes, but mostly no". It's true that the dashboard of the operational BI tools somewhat overlaps with some values obtained through the process mining approach (especially in the statistics/performance area), but the main difference is that the operational tools core information is about the systems and what each of them did and when, but as the process transcendently spans over many different systems and actors, it is almost impossible to easily put together similar process sequence view from these operational reports. On other hand, the process analysis provides direct information related to the actual process - who did what, when and how many times and most importantly in what sequence, including (unwanted) loops. And the information from operational analysis tools can tremendously support the process discovery process, but as of now they are two different beasts.

We at Excelacom do not only like good and properly prepared tea (Earl Grey with a bit of milk, please), but we also love to improve and streamline processes of our customers. Whether it is the "basic" end-to-end process discovery or redesign of as-is to better to-be process together with the underlying IT architecture, our deep expertise in process analysis can do it all, just let us know.

In June, the Excelacom team also participated at annual Process Mining Camp conference in Eindhoven where our specialists listened and compared experiences with other process aficionados and experts. Thanks to this gathering our consultants will be able to assist our customers even better in their quest for ultimate process understanding and optimization.

For more information on how Excelacom can help you streamline your everyday processes, email us at marketing@excelacom.com.